Architecture and Components

Deployment

Note that the architecture is designed to work on OpenShift. Deploying it on another platform might require some changes (e.g. for high availability and replication).

In this section we explain the architecture of the CERN Search platform and its components.

Components

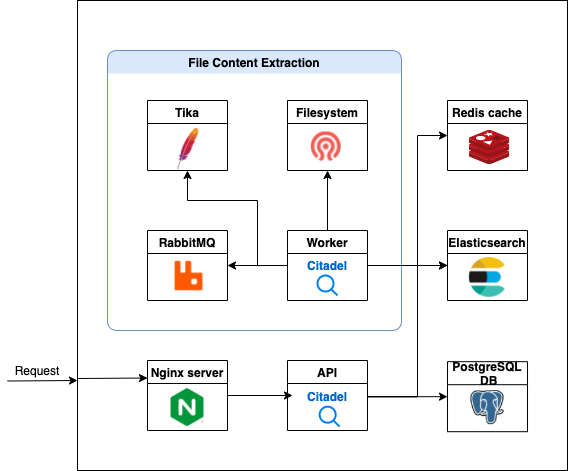

The platform has the following main components: the storage (Ceph), the relational database (PostgreSQL), the document database (Elasticsearch), the key-value store (Redis), the message broker (RabbitMQ), the OCR server (Tika), the main web application (RESTful API) and the worker (Celery). For production deployments, we set up an Nginx server and Flower monitoring on top of them.
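To make the relationships between these components more concrete, the following Python sketch encodes one possible dependency map. It then derives an order in which to bring the services up, using the standard library's graphlib (Python 3.9+). The edges are an assumption based on the component descriptions in this section, not a transcription of the figure. The component names are only labels.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: component -> services it needs.
# The edges are an assumption drawn from the component descriptions.
DEPENDENCIES = {
    "Ceph": set(),
    "PostgreSQL": set(),
    "Elasticsearch": set(),
    "Redis": set(),
    "RabbitMQ": set(),
    "Tika": set(),
    "Web application": {"PostgreSQL", "Elasticsearch", "Redis", "RabbitMQ"},
    "Worker": {"PostgreSQL", "Elasticsearch", "Redis", "RabbitMQ", "Ceph", "Tika"},
    "Nginx": {"Web application"},
    "Flower": {"RabbitMQ"},
}


def startup_order(dependencies):
    """Return the components ordered so each one comes after its dependencies."""
    # static_order() raises graphlib.CycleError if the map contains a cycle.
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    for component in startup_order(DEPENDENCIES):
        print(component)
```

The backing services (storage, databases, broker, OCR) have no dependencies, so they come first. Nginx and Flower come after the applications they front or monitor.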

The components are connected as shown in the figure above. The functionality of each one is explained below:

- Redis: The key-value store used to save session information and the workers' state.

- Ceph: Permanent storage used for file content extraction and indexing. Files are saved temporarily and deleted once they have been processed successfully. The extracted content is kept for the recovery/reindex process.

- PostgreSQL: The relational database of choice. It stores the users' information (email, username, etc.) along with the records and their versions. This data can be used for recovery if the document store (Elasticsearch) becomes corrupted; check the reindex instructions in the operation section for more information.

PostgreSQL can be changed

With some minor modifications, CERN Search can work on top of MySQL or SQLite, although the latter is not recommended for production.

- RabbitMQ: The message broker used by the workers.

- Tika: Provides OCR features for file content extraction.

- Elasticsearch: The document store. It stores and indexes only the latest version of each document. Documents may contain extra fields (e.g. text extracted from binaries) that are needed to produce relevant search results.

- CERN Search Web application: Based on the Invenio Framework, which is built on top of Flask. It provides the web user interface to obtain authorization tokens, and the RESTful API to perform the operations that make it a search platform.

- CERN Search Worker: The same application deployed in worker mode with Celery and eventlet.

- Flower: Web-based tool for monitoring and administering Celery clusters.
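PostgreSQL keeps every version of a record, while Elasticsearch holds only the latest one. Rebuilding the document store therefore amounts to selecting the newest version of each record and re-attaching the content previously extracted from its files. The sketch below illustrates that idea with Python's built-in sqlite3 module. The record_versions table, its columns and the content field are hypothetical; they do not reflect the actual Invenio schema or the real reindex procedure.

```python
import json
import sqlite3


def latest_versions(conn):
    """Yield (record_id, version, data) for the newest version of every record."""
    rows = conn.execute(
        """
        SELECT v.record_id, v.version, v.data
        FROM record_versions AS v
        JOIN (SELECT record_id, MAX(version) AS version
              FROM record_versions GROUP BY record_id) AS latest
          ON latest.record_id = v.record_id AND latest.version = v.version
        ORDER BY v.record_id
        """
    )
    for record_id, version, data in rows:
        yield record_id, version, json.loads(data)


def build_documents(conn, extracted_content):
    """Build the documents to send back to the document store.

    extracted_content maps record_id -> text previously extracted from the
    record's file (kept in the permanent storage, Ceph in this architecture).
    """
    docs = []
    for record_id, version, data in latest_versions(conn):
        doc = {"id": record_id, "version": version, **data}
        text = extracted_content.get(record_id)
        if text is not None:
            doc["content"] = text  # hypothetical field for text extracted from binaries
        docs.append(doc)
    return docs


def demo_connection():
    """In-memory database with a hypothetical schema and sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE record_versions (record_id TEXT, version INTEGER, data TEXT)")
    conn.executemany(
        "INSERT INTO record_versions VALUES (?, ?, ?)",
        [
            ("rec-1", 1, json.dumps({"title": "Draft"})),
            ("rec-1", 2, json.dumps({"title": "Final"})),
            ("rec-2", 1, json.dumps({"title": "Slides"})),
        ],
    )
    return conn


if __name__ == "__main__":
    for doc in build_documents(demo_connection(), {"rec-2": "text extracted from slides.pdf"}):
        print(doc)
```

Only version 2 of rec-1 is emitted, matching the rule that the document store holds the latest version only.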

High availability, replication and others

Database and Elasticsearch replication, backup and high availability are out of the scope of this documentation.

We advise using readiness and liveness probes, such as the ones provided by OpenShift, which take care of keeping the components available.
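As an illustration, a readiness check can verify that the backing services accept TCP connections before the web application receives traffic. This is a minimal sketch that assumes hypothetical service host names; the ports are the upstream defaults of each service. When it runs as an exec readiness probe, a non-zero exit status marks the container as not ready.

```python
import socket
import sys

# Hypothetical host names; ports are the default ones for each service.
BACKENDS = {
    "postgresql": ("postgresql", 5432),
    "redis": ("redis", 6379),
    "rabbitmq": ("rabbitmq", 5672),
    "elasticsearch": ("elasticsearch", 9200),
}


def tcp_reachable(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and DNS failures
        return False


def readiness(backends=BACKENDS, timeout=2.0):
    """Return the names of the backends that are not reachable."""
    return [name for name, (host, port) in backends.items()
            if not tcp_reachable(host, port, timeout)]


if __name__ == "__main__":
    failing = readiness()
    if failing:
        print("not ready:", ", ".join(failing))
        sys.exit(1)
    print("ready")
```

A TCP check only proves that a port is open. A stricter probe could call each service's own health endpoint instead.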

For the scalability of the web application, this task should be delegated to an autoscaler component, such as the one in the OpenShift template we use in production.
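For reference, the Kubernetes Horizontal Pod Autoscaler, which OpenShift also supports, computes the desired number of replicas as ceil(currentReplicas * currentMetric / targetMetric). It skips any change while the ratio stays within a tolerance (0.1 by default). The function below is a simplified Python sketch of that rule for reasoning about replica counts. The minimum and maximum bounds are hypothetical defaults, and the real controller applies further logic, such as stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA rule: scale proportionally to metric/target, then clamp."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the (hypothetical) configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, two web application pods running at 90% CPU against a 60% target would be scaled to three.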

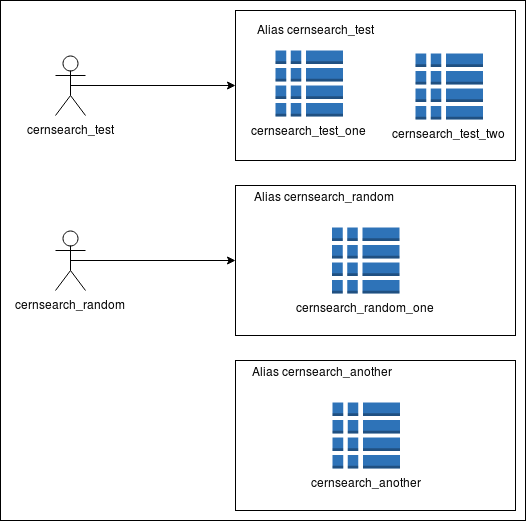

Access Control and isolation

The platform supports a setup with many search instances, each of them isolated from the others.

For Elasticsearch indexes, each instance has a dedicated user that only has access to a specific alias. For example, the user cernsearch_test only has access to the cernsearch_test alias, i.e. the cernsearch_test[_-]* indexes (see Figure 2). Isolation is therefore achieved by restricting access based on the index prefix. All production indexes are located in the es-cernsearch endpoint, and development ones in es-cernsearchqa.
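The prefix rule can be illustrated with a short Python check: an index belongs to an instance only if its name is the alias followed by _ or - and a suffix. This sketch covers the matching logic only, using hypothetical index names. The actual enforcement is done by the Elasticsearch user permissions, which are not shown here.

```python
import re


def index_pattern(alias):
    """Regex equivalent of the <alias>[_-]* index pattern."""
    return re.compile(re.escape(alias) + r"[_-].*")


def can_access(alias, index):
    """True if a user restricted to `alias` may access `index`."""
    return index_pattern(alias).fullmatch(index) is not None
```

Requiring the separator keeps cernsearch_test from also matching the indexes of a hypothetical cernsearch_testing instance.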